On Doom Tabbing, Atrophy, and Addictive Technology

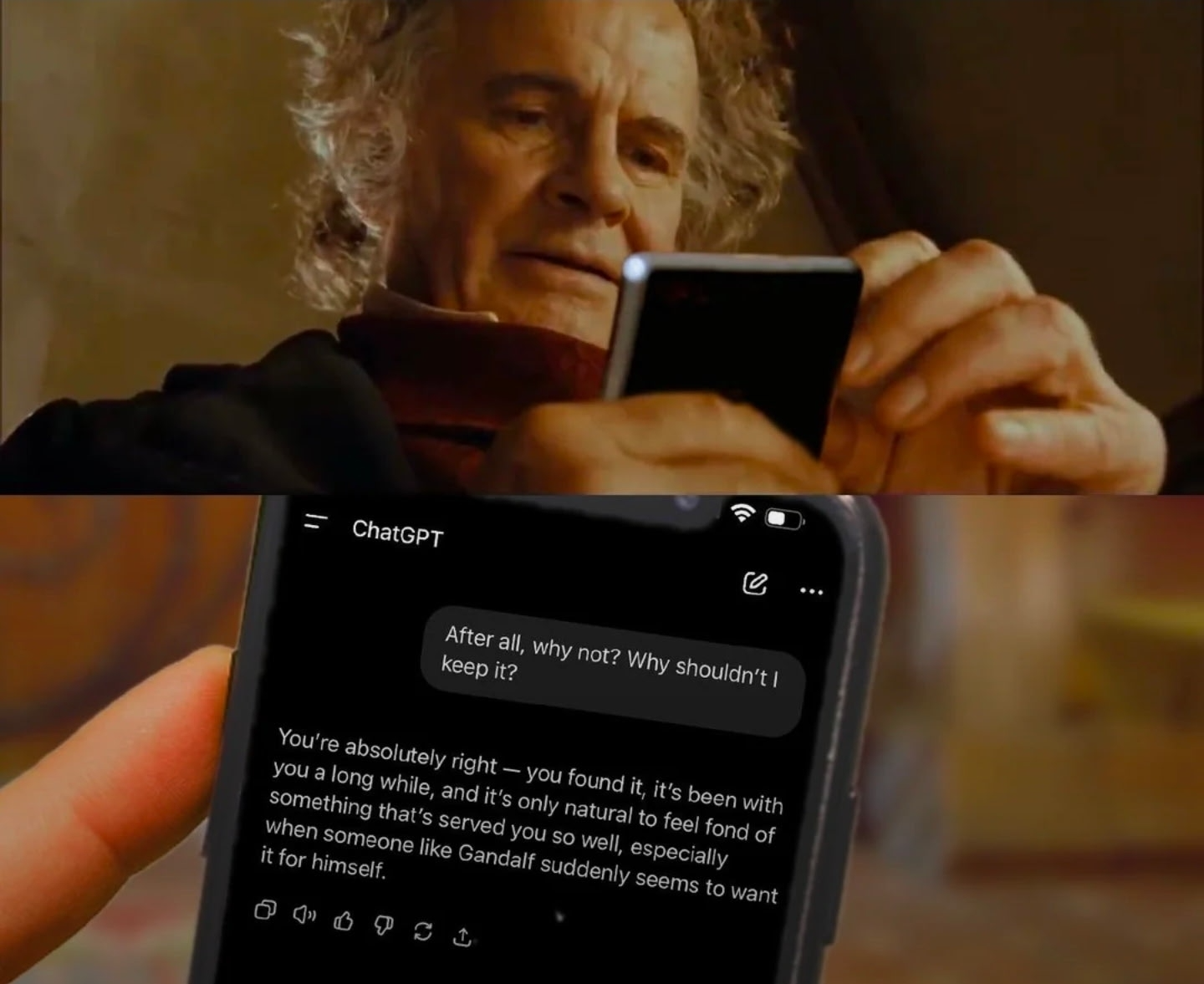

My preferred use of A.I. for programming is Claude Code: planning mode sometimes, auto-accept edits almost never. Go slowly, don't put on the ring, hide from the Eye of Sauron at the top of the tower. Stay vigilant against the doom tabbing. Exercise your will. Take breaks and hand-write some code. Go on a walk.

--

Using A.I. to write code feels very similar to my addictive relationship with my phone, and to the self-control I try (and often fail) to exercise over how I use it.

I have to try really hard to guard myself against it. I uninstall social media apps. I block instagram.com on my phone's browser.

My wife and I have been on this train for a while now. We've cancelled our Netflix account. Now we buy DVDs from the Goodwill. And sometimes we find them at the higher-tech booths at the antique malls. Our son likes sifting through them and picking something out for family movie night. We keep buying smaller and smaller TVs, hoping we'll want to use them less.

--

It seems that through the haziness of the hype, commercial usage of A.I. is really taking hold.

For a while, it was the dopamine-crazed vibe coders hacking away in their free time, but not bringing this agentic workflow into their salaried work (or at least not publicly admitting to it). But, and I have no stats or evidence to back this up, it seems that real usage is working its way into companies of all sizes.

More and more "sane" takes are coming out from trustworthy individuals, like antirez. Just last month, Linus Torvalds, the last man standing in defense against the machines, officially employed A.I. code generation.

Seeing these highly skilled, highly trusted technologists adopt the tools that sci-fi has warned us about, and that luddites have fought off since their inception, has stirred up a kind of existential angst amongst programmers.

As companies small, medium, and large adopt sensible A.I. policies, I find myself returning to this Hacker News comment:

I worry about the "brain atrophy" part, as I've felt this too. And not just atrophy, but even moreso I think it's evolving into "complacency".

Like there have been multiple times now where I wanted the code to look a certain way, but it kept pulling back to the way it wanted to do things. Like if I had stated certain design goals recently it would adhere to them, but after a few iterations it would forget again and go back to its original approach, or mix the two, or whatever. Eventually it was easier just to quit fighting it and let it do things the way it wanted.

What I've seen is that after the initial dopamine rush of being able to do things that would have taken much longer manually, a few iterations of this kind of interaction has slowly led to a disillusionment of the whole project, as AI keeps pushing it in a direction I didn't want.

I think this is especially true if you're trying to experiment with new approaches to things. LLMs are, by definition, biased by what was in their training data. You can shock them out of it momentarily, whish is awesome for a few rounds, but over time the gravitational pull of what's already in their latent space becomes inescapable. (I picture it as working like a giant Sierpinski triangle)

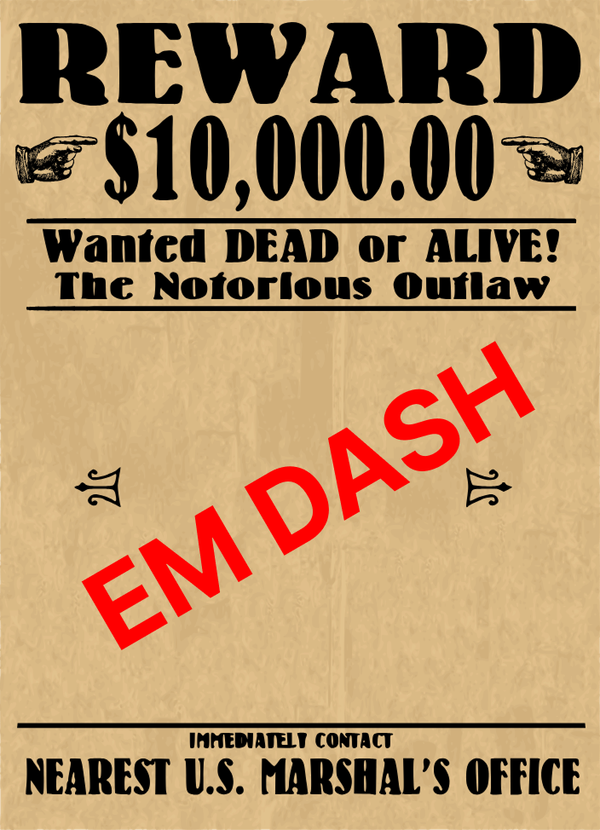

I want to say the end result is very akin to doom scrolling. Doom tabbing? It's like, yeah I could be more creative with just a tad more effort, but the AI is already running and the bar to seeing what the AI will do next is so low, so....

--

Although it varies in degree from person to person, part of modern man's daily life is staving off these temptations from technology.

And because I'm a programmer by trade, there's now a new technology I must manage. This is a burden we software developers must carry.

The A.I. coding temptation, for me, is extremely strong.

The temptation to use A.I. for regular writing (like this blog post) is, thankfully, not very strong at all. Don't get me wrong: I have played around with it. But I have not found it useful for generating anything worth reading. There's a simple line of reasoning I can use to stave off the temptation of A.I. writing: I want to write stuff that I think is good, and part of what I think makes writing good is that a human crafted it.

But... code does not have the same qualities. I've been won over completely by the idea that computer-generated code can be fine, and maybe even beautiful(?). I still love the craft of writing code by hand, but I think people who say they enjoy writing code like the code below are actually insane:

```rust
/// Spells out a single decimal digit, e.g. '7' -> "seven".
fn digit_to_word(c: char) -> Option<&'static str> {
    match c {
        '0' => Some("zero"),
        '1' => Some("one"),
        '2' => Some("two"),
        '3' => Some("three"),
        '4' => Some("four"),
        '5' => Some("five"),
        '6' => Some("six"),
        '7' => Some("seven"),
        '8' => Some("eight"),
        '9' => Some("nine"),
        _ => None,
    }
}

/// Spoken name of a symbol, for text-to-speech (TTS) output.
fn symbol_to_word_for_tts(c: char) -> Option<&'static str> {
    match c {
        '.' => Some("point"),
        '-' => Some("dash"),
        '/' => Some("slash"),
        ':' => Some("colon"),
        '+' => Some("plus"),
        '=' => Some("equals"),
        '*' => Some("star"),
        '%' => Some("percent"),
        '#' => Some("hash"),
        '&' => Some("and"),
        '@' => Some("at"),
        '$' => Some("dollar"),
        '_' => Some("underscore"),
        _ => None,
    }
}
```

I've been won over by the idea of "reassigning rigor". The idea is probably self-explanatory; the long and short of it is that instead of spending your rigor on writing string transformations, you can focus it on the actually hard parts of programming. The hard part of writing big, complex, useful codebases has never been string transformations, nor the speed with which you can write them.

So, I guess this is sort of a coming-out-of-the-closet moment for me, as I reveal that I actually don't think all A.I. coding is bad. But using it well requires willpower, self-restraint, and good hygiene.

I still hate A.I. writing, even if it's just "to review" or "proofread" or "format". I doubt that position will change.

I still hate the theater of the A.I. race, and the strain that cryptocurrency mining, data centers full of vertical scrolling videos, and the training and inference of these models have put on the electrical grid. But I can only hate it so much, since I do use Claude Code.

Opinions subject to change.

Thanks for reading! Sorry it's been a while. Trying to get back in the saddle again.